Elon Musk is renowned for his ambitious, often grandiose visions, and his tendency to announce groundbreaking technology far in advance. Yet, the timelines Musk sets for Tesla’s developments, particularly in autonomous driving, frequently go unmet, causing many to dismiss his claims as overly optimistic and unlikely to materialize. Nowhere is this more evident than in Tesla’s approach to Full Self-Driving (FSD), the company’s vision of an autonomous driving solution.

Musk has been talking about autonomous driving since 2014, and many reports have documented Tesla’s announcements in detail.

Tesla: Not enough sensors?

Unlike nearly every other player in the field, Tesla has chosen a unique route: relying on low-cost cameras as its sole sensor system, rather than supplementing them with familiar aids such as lidar or radar. This approach has sparked widespread skepticism. Many industry experts believe that Tesla’s “Vision-Only” strategy is unlikely to succeed, especially given the significant hurdles involved in achieving spatial awareness through cameras alone. Meanwhile, companies like Waymo have taken a more conventional route, combining costly, hardware-intensive sensors such as lidar and radar to address the challenges of autonomous driving. Waymo’s methods appear to have achieved more tangible progress, and its strategy is widely perceived as more reliable.

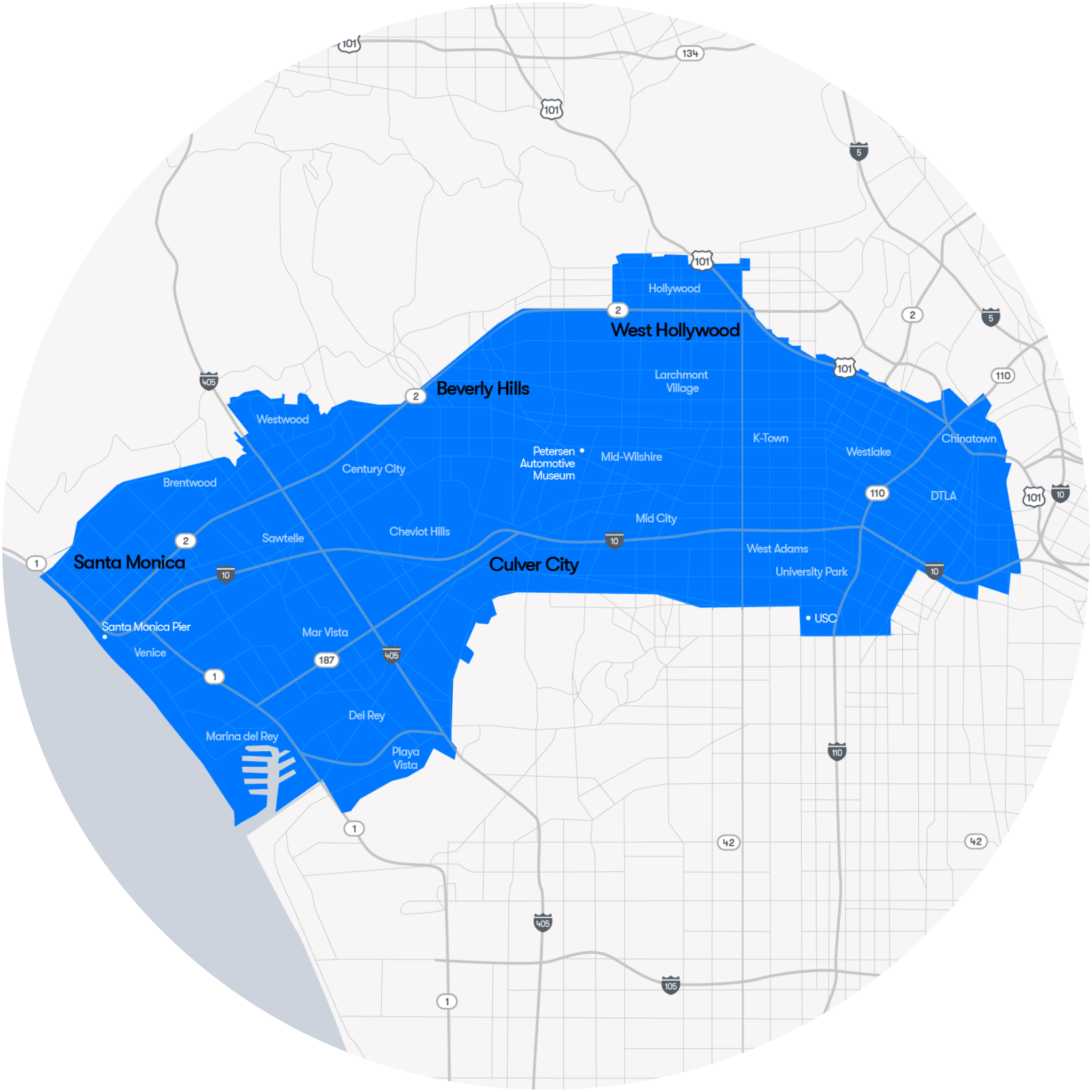

But does that mean Tesla’s approach is doomed? Not necessarily. Waymo, for instance, has successfully rolled out autonomous services, but only on a very limited scale, currently operating a fleet of just 700 vehicles. Waymo does not produce its own cars. Instead, it retrofits vehicles from other manufacturers (like Jaguar, Volvo, Mercedes, and Stellantis) with the necessary and highly expensive autonomous technology (estimates are well over $100,000, in addition to the vehicle and conversion costs). This approach, while effective, is cost-intensive and hard to scale. In addition, its navigation relies on regular updates of meticulous, precise maps. Consequently, Waymo’s service remains restricted to only a handful of cities, including “parts of” San Francisco, Phoenix, Los Angeles, and Austin.
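To make the scaling argument concrete, here is a minimal back-of-the-envelope sketch using only the two figures quoted above: a fleet of 700 vehicles and autonomy hardware costing at least $100,000 per vehicle. The one-million-vehicle fleet is a hypothetical added for illustration, and the function name is invented for this example.

```python
# Figures quoted in the text. The per-vehicle cost is a lower bound
# ("well over $100,000") and excludes vehicle and conversion costs.
FLEET_SIZE = 700
AUTONOMY_COST_PER_VEHICLE_USD = 100_000


def fleet_autonomy_cost(fleet_size: int, cost_per_vehicle: int) -> int:
    """Return the total autonomy-hardware spend for a fleet, in USD."""
    return fleet_size * cost_per_vehicle


current_cost = fleet_autonomy_cost(FLEET_SIZE, AUTONOMY_COST_PER_VEHICLE_USD)

# Hypothetical scale-up (not from the text): one million vehicles
# at the same lower-bound unit cost.
scaled_cost = fleet_autonomy_cost(1_000_000, AUTONOMY_COST_PER_VEHICLE_USD)

print(f"700 vehicles: at least ${current_cost:,}")
print(f"1,000,000 vehicles: at least ${scaled_cost:,}")
```

Even at the lower bound, the hardware bill grows linearly with fleet size, which is the core of the "hard to scale" objection.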

The vision problem: It’s all about AI

This limitation highlights why Tesla’s vision-only strategy is not as far-fetched as it may initially appear. By equipping each Tesla vehicle with inexpensive cameras installed directly in the factory (unlike other manufacturers, who install autonomous technology only to order), Tesla aims to achieve autonomous driving without adding substantial extra costs per vehicle. This setup allows for remarkable scalability and cost-efficiency. However, there is a clear trade-off: instead of using additional sensors to enhance spatial awareness, Tesla must train its AI to interpret complex environments based solely on visual data, much like a human driver. This approach seeks to emulate the way humans rely on vision and intelligence to navigate the road. Ultimately, it’s not the number of sensors that determines success, but the depth of understanding a system brings to every moment on the road.

Just as humans depend primarily on what we see with our eyes, Tesla’s AI must learn to interpret and understand visual information to handle challenging situations. For instance, when driving in fog or on snowy roads, humans can intuitively anticipate where to go next, even when road features are obscured. It’s not simply about detecting obstacles; it’s about recognizing what we see and knowing the appropriate action to take, almost instinctively. It also includes understanding communication between road users, even when that consists of nothing more than gestures.

This is precisely what Tesla is aiming to achieve: an AI that not only “sees” but also comprehends its surroundings well enough to drive safely, even under adverse conditions. The ambition here is profound. If Tesla’s approach succeeds, its vehicles could navigate even in areas without detailed map data, such as unpaved roads, gravel paths, or places where the physical environment diverges from map records.

Achieving this, however, requires solving a formidable AI problem, one far more challenging than conventional methods that use lidar and other supplemental sensors. Ultimately, it is a solvable problem. The only question that remains is: when will this vision become a reality? Tesla has been promising autonomous driving for years, and although the road has been long, recent advances suggest that the company might finally be on the verge of delivering on its FSD ambitions.

Scaling Up AI: Tesla’s Massive Computing Push

One indicator that Tesla is closer to realizing its vision is the rapid buildout of AI computing resources across Musk’s companies. In just a few months, Musk’s AI company xAI created one of the largest AI GPU clusters in existence, powered by 100,000 Nvidia GPUs. This cluster, dubbed “Colossus,” belongs to xAI rather than Tesla; Tesla’s own training cluster, which processes the immense volumes of visual data needed to drive autonomously, is called Cortex. Remarkably, the plan is to double Colossus to 200,000 GPUs and possibly expand it to 300,000 in the future. Typically, constructing a supercomputer of this magnitude takes 18 to 24 months, but Colossus was fast-tracked in a fraction of that time (4 months), a testament to the urgency and execution capability that Musk’s teams also bring to advancing FSD.
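The buildout figures above are easier to judge as ratios. The following is a small illustrative calculation using only the numbers quoted in this section; the variable and function names are invented for the example.

```python
# Numbers quoted in the text.
ACTUAL_BUILD_MONTHS = 4
TYPICAL_BUILD_MONTHS = (18, 24)  # typical range for a cluster of this size
GPU_PLAN = {"initial": 100_000, "planned": 200_000, "possible": 300_000}


def build_speedup(typical_months: float, actual_months: float) -> float:
    """How many times faster the build was than a typical schedule."""
    return typical_months / actual_months


speedup_low, speedup_high = (
    build_speedup(months, ACTUAL_BUILD_MONTHS) for months in TYPICAL_BUILD_MONTHS
)

# Size of each expansion stage relative to the initial cluster.
growth = {stage: count / GPU_PLAN["initial"] for stage, count in GPU_PLAN.items()}

print(f"Build was {speedup_low:.1f}x to {speedup_high:.1f}x faster than typical")
for stage, factor in growth.items():
    print(f"{stage}: {GPU_PLAN[stage]:,} GPUs ({factor:.0f}x initial)")
```

Note that GPU count is only a rough proxy for usable training compute, which also depends on the GPU generation, networking, and utilization.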

This surge in computing power allows Tesla’s neural networks to be trained faster and more effectively, which is crucial for refining the AI algorithms behind FSD. At the same time, Tesla has the largest fleet of vehicles (every Tesla produced since 2019) collecting valuable driving data every day to train those algorithms. Such advances have fueled a series of software improvements, culminating in the release of Tesla’s Full Self-Driving Version 13, expected to reach customers by the end of November 2024.

Tesla’s FSD Advancements: The Road to Unsupervised?

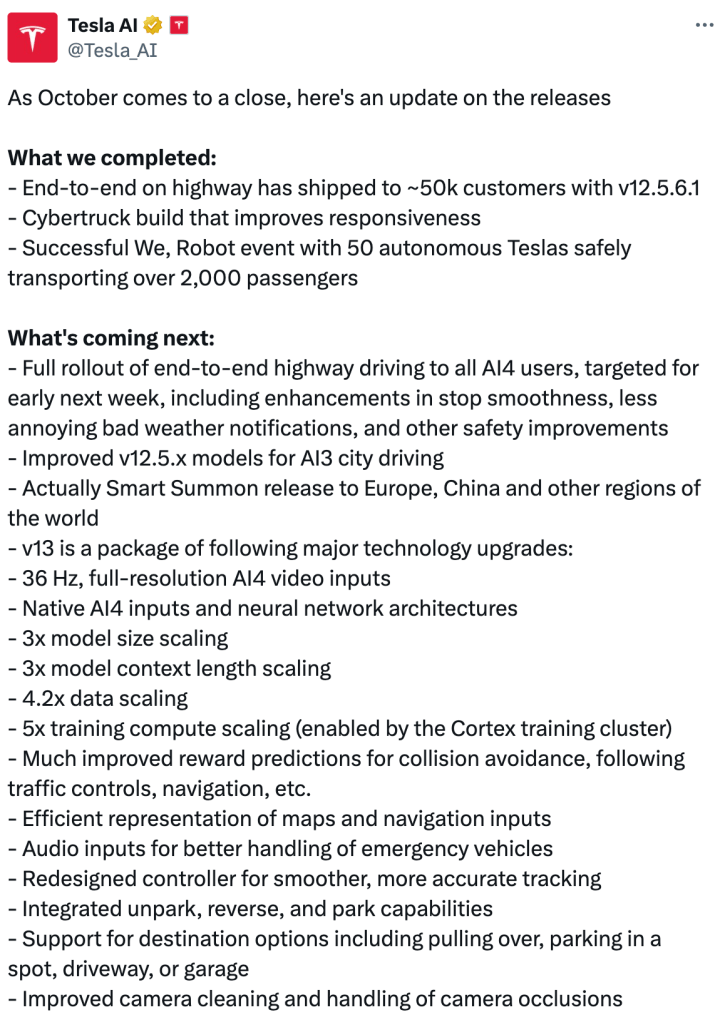

In a recent post on X, Tesla AI highlighted the latest FSD progress and announced further improvements due by the end of this month. The list is truly remarkable.

Already, 50,000 customers have experienced Tesla’s “end-to-end” highway driving feature, which enables the car to manage all aspects of driving from highway entry to exit. This functionality, first released with Version 12.5.2.1, is expected to reach more Tesla vehicles by early November 2024, along with improvements in stop smoothness, fewer weather-related notifications, and other safety features.

Further developments include the “Smart Summon” feature, allowing the vehicle to navigate through parking lots autonomously to reach the owner, which will soon be available in Europe, China, and other global markets.

The next big milestone is Version 13, which introduces major technological upgrades across the FSD system.

FSD 13 key features explained:

- 36 Hz Full-Resolution AI4 Video Inputs: The cameras now capture images 36 times per second, allowing the car to process visual information faster and in greater detail. This increased frame rate helps the vehicle better understand and react to its surroundings with more accuracy.

- Native AI4 Inputs and Neural Network Architectures: The AI system, or the “brain” of the car, now works more naturally with its cameras. This means the system interprets the images directly, allowing it to react faster and more precisely.

- 3x Model Size Scaling: The neural network has grown significantly, meaning it now has more “knowledge” about driving and can recognize and handle a wider range of scenarios, making it more capable in complex driving situations.

- 3x Model Context Length Scaling: The AI can now “remember” details for three times as long, helping it to make better decisions based on a longer history of what it has seen recently, which improves its understanding of ongoing driving events.

- 4.2x Data Scaling: The car’s neural network now learns from over four times more examples than before, which helps it to understand and adapt to a broader range of driving scenarios, making it smarter and more flexible on the road.

- 5x Training Compute Scaling (Enabled by the Cortex Training Cluster): Training the model now uses five times as much compute, supplied by Tesla’s Cortex supercomputer. More training compute is what makes the larger model and the larger dataset practical, helping Tesla refine FSD more rapidly.

- Enhanced Collision Avoidance and Traffic Control Compliance: The system’s “reward predictions” have been improved, helping it better anticipate safe driving actions. This means it’s better at avoiding potential collisions, responding to traffic signals, and navigating more accurately.

- Efficient Map and Navigation Data Processing: The system now interprets map information more efficiently, allowing the car to navigate with greater awareness of upcoming turns, road conditions, and potential obstacles.

- Audio Inputs for Emergency Vehicle Detection: The car can now “hear” sirens from emergency vehicles, allowing it to respond appropriately by making way, as a human driver would.

- Redesigned Control System for Smoother Tracking: Improvements to the car’s steering, braking, and acceleration provide a smoother and more accurate driving experience, following roads and traffic patterns with a high degree of precision.

- Integrated Parking and Reversing Capabilities: The car can now perform complex parking maneuvers, such as three-point turns, backing into parking spots, or even self-parking, making parking more convenient and automated.

- Support for Destination Options, Including Pulling Over or Parking: The system can navigate to specific spots like driveways, garages, or designated parking spaces, offering flexibility based on the driver’s needs.

- Improved Camera Cleaning and Handling of Occlusions: Enhanced handling of camera obstructions, like rain or dirt, ensures the car maintains clear visibility and reliable performance in various weather conditions.

With these updates, Tesla’s FSD system is designed to become more adaptable, intelligent, and responsive, bringing it closer to fulfilling the autonomous driving promise.
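To give the 36 Hz figure from the feature list some physical meaning, here is a minimal sketch that converts the frame rate into time between frames and distance travelled between frames. The 36 Hz rate comes from the list above; the example speeds are assumptions chosen for illustration, and the function names are invented for this example.

```python
CAMERA_RATE_HZ = 36  # from the FSD 13 feature list


def frame_interval_ms(rate_hz: float) -> float:
    """Time between two consecutive frames, in milliseconds."""
    return 1000.0 / rate_hz


def distance_per_frame_m(speed_kmh: float, rate_hz: float) -> float:
    """Distance the car travels between two frames, in meters."""
    speed_m_per_s = speed_kmh / 3.6  # km/h to m/s
    return speed_m_per_s / rate_hz


print(f"Frame interval at {CAMERA_RATE_HZ} Hz: "
      f"{frame_interval_ms(CAMERA_RATE_HZ):.1f} ms")

# Illustrative speeds (assumptions, not from the text).
for speed_kmh in (30, 50, 120):
    meters = distance_per_frame_m(speed_kmh, CAMERA_RATE_HZ)
    print(f"At {speed_kmh} km/h the car moves {meters:.2f} m between frames")
```

This only describes how often the cameras deliver new images. How quickly the system reacts also depends on processing latency, which the feature list does not state.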

The Potential for Domination

If Tesla succeeds in deploying fully autonomous vehicles without the need for human supervision, it would represent a monumental shift in the autonomous driving industry. Unlike competitors that retrofit autonomous technology to order, Tesla has installed its camera-based, FSD-capable hardware in every car built since 2019. That positions it to activate a fleet of millions of autonomous-capable vehicles almost instantly, at a fraction of the cost of traditional autonomous driving systems.

This ready-made scalability gives Tesla a huge competitive advantage, particularly as a potential provider of autonomous cab rides: it is the only company capable of producing millions of robotaxis at a cost of less than $30,000 per vehicle. With its rapid production pace, advanced AI, and swift software development, Tesla outpaces competitors and could redefine autonomous driving globally, once “Vision Only” becomes more than just a vision.

Another 5 cents.

//Alex